This post installs the Kubernetes control plane by hand in order to understand and learn the bootstrapping process.

Reference: https://github.com/kelseyhightower/kubernetes-the-hard-way/tree/master/docs

Of the four Raspberry Pis, two serve as master nodes that form the control plane. The other two are worker nodes, on which a simple web page is deployed to confirm that the cluster works.

Infrastructure Setting

Raspberry Pi cluster setup

- 4pc Raspberry Pi 3 Model B+

- 4pc 16 GB SD card

- 4pc ethernet cables

- 1pc network switch

- 1pc USB power hub

- 4pc Micro-USB cables

Cluster Static IP

| Host Name | IP |
| --- | --- |
| k8s-master-1 | 172.30.1.40 |
| k8s-master-2 | 172.30.1.41 |
| k8s-worker-1 | 172.30.1.42 |
| k8s-worker-2 | 172.30.1.43 |
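
The same address plan is needed again for /etc/hosts on every node, so it can help to keep it in one place. The following is a minimal sketch, assuming a hypothetical `NODES` array and `hosts_lines` helper (neither is part of the original setup), that prints the matching /etc/hosts lines:

```shell
#!/usr/bin/env bash
# NODES is a hypothetical name=ip list that mirrors the table above.
NODES=(
  "k8s-master-1=172.30.1.40"
  "k8s-master-2=172.30.1.41"
  "k8s-worker-1=172.30.1.42"
  "k8s-worker-2=172.30.1.43"
)

# Print one /etc/hosts-style line (IP first, then host name) per node.
hosts_lines() {
  local entry
  for entry in "${NODES[@]}"; do
    printf '%s %s\n' "${entry#*=}" "${entry%%=*}"
  done
}

hosts_lines
```

On a node, the output could then be appended with something like `hosts_lines | sudo tee -a /etc/hosts`.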

1. Infrastructure

The Ubuntu Server image ships with SSH built in.

Default user/pwd : ubuntu/ubuntu

- Change the hostname

sudo hostnamectl set-hostname xxx

- Configure a static IP

vi /etc/netplan/xxx.yaml

network:
  ethernets:
    eth0:
      #dhcp4: true
      #optional: true
      addresses: [192.168.10.x/24]
      gateway4: 192.168.10.1
      nameservers:
        addresses: [8.8.8.8]
  version: 2

sudo netplan apply

- /etc/hosts

172.30.1.40 k8s-master-1

172.30.1.41 k8s-master-2

172.30.1.42 k8s-worker-1

172.30.1.43 k8s-worker-2

- /boot/firmware/nobtcmd.txt

Append the following:

cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1

2. Generating a CA and Certificates for the k8s cluster (PKI certificates)

- Certificate Authority

Client Certificates

- Admin Client

- Kubelet Client

- Kube Proxy Client

- Controller Manager Client

- Service Account key pair : Kube controller manager uses a key pair to generate and sign service account tokens

- Scheduler Client

Server Certificates

- API Server

Install cfssl on macOS to provision the PKI

- brew install cfssl

# Certificate Authority

Reference: https://kubernetes.io/ko/docs/tasks/administer-cluster/certificates/

Write a ca-config.json

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": ["signing", "key encipherment", "server auth", "client auth"],

"expiry": "8760h"

}

}

}

}

Create a CSR for your new CA(ca-csr.json)

- CN : the name of the user

- C : country

- L : city

- O : the group that this user will belong to

- OU : organization unit

- ST : state

{

"CN": "Kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "South Korea",

"L": "Seoul",

"O": "Kubernetes",

"OU": "CA",

"ST": "Seoul"

}

]

}

Initialize a CA

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

>> ca-key.pem, ca.csr, ca.pem

# Admin Client Certificate

Write admin-csr.json

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "South Korea",

"L": "Seoul",

"O": "system:masters",

"OU": "Kubernetes the Hard Way",

"ST": "Seoul"

}

]

}

Generate an Admin Client Certificate

- client certificate for the kubernetes admin user

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare admin

>> admin-key.pem, admin.csr, admin.pem

# Kubelet Client Certificate

Write k8s-node-x-csr.json

- Kubernetes uses a special-purpose authorization mode called Node Authorizer, which specifically authorizes API requests made by kubelets. To be authorized by the Node Authorizer, a kubelet must use a credential that identifies it as a member of the system:nodes group, with a username of system:node:<nodeName>.

{

"CN": "system:node:k8s-worker-1",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "South Korea",

"L": "Seoul",

"O": "system:nodes",

"OU": "Kubernetes The Hard Way",

"ST": "Seoul"

}

]

}

Generate a Kubelet Client Certificate for each Worker Node

export WORKER_IP=172.30.1.42

export WORKER_HOST=k8s-worker-1

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-hostname=${WORKER_IP},${WORKER_HOST} \

-profile=kubernetes \

${WORKER_HOST}-csr.json | cfssljson -bare ${WORKER_HOST}

>> k8s-worker-1-key.pem, k8s-worker-1.csr, k8s-worker-1.pem
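
Since the CSR file and the signing command differ only by host name and IP, both workers can be handled in one loop. The following is a sketch under assumptions: `write_kubelet_csr` and `OUT_DIR` are hypothetical names introduced here, the CSR fields copy the example above, and signing is skipped when cfssl or the CA files are not available in the current directory.

```shell
#!/usr/bin/env bash
# OUT_DIR is a hypothetical scratch directory for the generated CSR files.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# Write <host>-csr.json with the CN/O pair the Node Authorizer expects.
write_kubelet_csr() {
  local host="$1"
  cat > "${OUT_DIR}/${host}-csr.json" <<EOF
{
  "CN": "system:node:${host}",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    {
      "C": "South Korea",
      "L": "Seoul",
      "O": "system:nodes",
      "OU": "Kubernetes The Hard Way",
      "ST": "Seoul"
    }
  ]
}
EOF
}

for entry in "k8s-worker-1=172.30.1.42" "k8s-worker-2=172.30.1.43"; do
  host="${entry%%=*}"
  ip="${entry#*=}"
  write_kubelet_csr "${host}"

  # Sign only when cfssl and the CA material from the earlier steps exist.
  if command -v cfssl >/dev/null 2>&1 && command -v cfssljson >/dev/null 2>&1 \
     && [ -f ca.pem ] && [ -f ca-key.pem ] && [ -f ca-config.json ]; then
    cfssl gencert \
      -ca=ca.pem \
      -ca-key=ca-key.pem \
      -config=ca-config.json \
      -hostname="${ip},${host}" \
      -profile=kubernetes \
      "${OUT_DIR}/${host}-csr.json" | cfssljson -bare "${OUT_DIR}/${host}"
  else
    echo "wrote ${OUT_DIR}/${host}-csr.json; signing skipped (cfssl or CA files not found)"
  fi
done
```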

# Kube Proxy Client Certificate

Write kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "South Korea",

"L": "Seoul",

"O": "system:node-proxier",

"OU": "Kubernetes the Hard Way",

"ST": "Seoul"

}

]

}

Generate Kube Proxy Client certificate

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-proxy-csr.json | cfssljson -bare kube-proxy

>> kube-proxy.csr, kube-proxy.pem, kube-proxy-key.pem

# Controller Manager Client Certificate

Write kube-controller-manager-csr.json

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "South Korea",

"L": "Seoul",

"O": "system:kube-controller-manager",

"OU": "Kubernetes the Hard Way",

"ST": "Seoul"

}

]

}

Generate Controller Manager Client Certificate

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

>> kube-controller-manager.csr, kube-controller-manager.pem, kube-controller-manager-key.pem

# Service Account Key Pair

Write service-account-csr.json

{

"CN": "service-accounts",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "South Korea",

"L": "Seoul",

"O": "Kubernetes",

"OU": "Kubernetes the Hard Way",

"ST": "Seoul"

}

]

}

Generate Service Account Key pair

- The Kubernetes Controller Manager leverages a key pair to generate and sign service account tokens(https://kubernetes.io/docs/reference/access-authn-authz/service-accounts-admin/)

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

service-account-csr.json | cfssljson -bare service-account

>> service-account.csr, service-account.pem, service-account-key.pem

# Scheduler Client Certificate

Write kube-scheduler-csr.json

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "South Korea",

"L": "Seoul",

"O": "system:kube-scheduler",

"OU": "Kubernetes the Hard Way",

"ST": "Seoul"

}

]

}

Generate Kube Scheduler Client certificate

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-scheduler-csr.json | cfssljson -bare kube-scheduler

>> kube-scheduler.csr, kube-scheduler.pem, kube-scheduler-key.pem

# Kubernetes API Server Certificate

Write kubernetes-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "South Korea",

"L": "Seoul",

"O": "Kubernetes",

"OU": "Kubernetes the Hard Way",

"ST": "Seoul"

}

]

}

Generate Kubernetes API Server certificate

- The Kubernetes API server is automatically assigned the kubernetes internal dns name, which will be linked to the first IP address(10.32.0.1) from the address range(10.32.0.0/24) reserved for internal cluster services during the control plane bootstrapping.

- Master node IP address will be included in the list of subject alternative names for the Kubernetes API Server certificate. This will ensure the certificate can be validated by remote clients.

- When a load balancer is used, also add its hostname and IP to CERT_HOSTNAME.
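
The subject alternative name list is long and easy to mistype, so one option is to assemble it from its parts. This is only a sketch: the array names are hypothetical, and the values are the ones used in this walkthrough.

```shell
#!/usr/bin/env bash
# First IP of the service range, reserved for the "kubernetes" service.
service_ip="10.32.0.1"

# Master node IPs and host names (add load balancer entries here if one is used).
masters=("172.30.1.40" "k8s-master-1" "172.30.1.41" "k8s-master-2")

# Local access on the master itself.
local_names=("127.0.0.1" "localhost")

# Internal DNS names, as listed in this walkthrough.
internal_names=(
  "kubernetes"
  "kubernetes.default"
  "kubernetes.default.svc"
  "kubernetes.default.svc.cluster"
  "kubernetes.svc.cluster.local"
)

sans=("${service_ip}" "${masters[@]}" "${local_names[@]}" "${internal_names[@]}")

# Join the array with commas; IFS is changed only inside the subshell.
CERT_HOSTNAME=$(IFS=,; echo "${sans[*]}")
echo "${CERT_HOSTNAME}"
```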

CERT_HOSTNAME=10.32.0.1,172.30.1.40,k8s-master-1,172.30.1.41,k8s-master-2,127.0.0.1,localhost,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.svc.cluster.local

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-hostname=${CERT_HOSTNAME} \

-profile=kubernetes \

kubernetes-csr.json | cfssljson -bare kubernetes

>> kubernetes.csr, kubernetes.pem, kubernetes-key.pem

3. Generating Kubernetes Configuration Files for Authentication

kubeconfigs enable k8s clients to locate and authenticate to the kubernetes api servers.

Worker nodes need

- ${worker_node}.kubeconfig (for Kubelet)

- kube-proxy.kubeconfig (for Kube-proxy)

# Generate Kubelet-kubeconfig

- When generating kubeconfig files for kubelets, the client certificate matching the kubelet's node name must be used. This ensures kubelets are properly authorized by the Kubernetes Node Authorizer.

- KUBERNETES_ADDRESS is set to a master node's IP here. If a load balancer sits in front of the masters, use the load balancer's IP instead.

KUBERNETES_ADDRESS=172.30.1.40

INSTANCE=k8s-worker-2

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://${KUBERNETES_ADDRESS}:6443 \

--kubeconfig=${INSTANCE}.kubeconfig

kubectl config set-credentials system:node:${INSTANCE} \

--client-certificate=${INSTANCE}.pem \

--client-key=${INSTANCE}-key.pem \

--embed-certs=true \

--kubeconfig=${INSTANCE}.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:node:${INSTANCE} \

--kubeconfig=${INSTANCE}.kubeconfig

kubectl config use-context default --kubeconfig=${INSTANCE}.kubeconfig

>> k8s-worker-1.kubeconfig, k8s-worker-2.kubeconfig

# Generate kubeproxy-kubeconfig

KUBERNETES_ADDRESS=172.30.1.40

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://${KUBERNETES_ADDRESS}:6443 \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials system:kube-proxy \

--client-certificate=kube-proxy.pem \

--client-key=kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

>> kube-proxy.kubeconfig

Master nodes need

- admin.kubeconfig (for user admin)

- kube-controller-manager.kubeconfig (for kube-controller-manager)

- kube-scheduler.kubeconfig (for kube-scheduler)

# Generate admin.kubeconfig

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=admin.kubeconfig

kubectl config set-credentials admin \

--client-certificate=admin.pem \

--client-key=admin-key.pem \

--embed-certs=true \

--kubeconfig=admin.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=admin \

--kubeconfig=admin.kubeconfig

kubectl config use-context default --kubeconfig=admin.kubeconfig

>> admin.kubeconfig

# Generate kube-controller-manager-kubeconfig

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=kube-controller-manager.pem \

--client-key=kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig

>> kube-controller-manager.kubeconfig

# Generate kubescheduler-kubeconfig

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=kube-scheduler.pem \

--client-key=kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-scheduler \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

>> kube-scheduler.kubeconfig

4. Generating the Data Encryption Config and Key(Encrypt)

- Kubernetes stores a variety of data including cluster state, application configurations, and secrets. Kubernetes supports the ability to encrypt cluster data at rest.

- Generate an encryption key and an encryption config suitable for encrypting Kubernetes Secrets.
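
Before the key is written into the config, it is cheap to confirm that it decodes back to the intended 32 bytes. A minimal sketch, where `KEY_BYTES` is a hypothetical variable name and `base64 --decode` is assumed to be available (it is on both GNU coreutils and macOS):

```shell
#!/usr/bin/env bash
# Same generation command as in this section.
ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

# Decode the key again and count the raw bytes.
KEY_BYTES=$(printf '%s' "${ENCRYPTION_KEY}" | base64 --decode | wc -c | tr -d ' ')

if [ "${KEY_BYTES}" -ne 32 ]; then
  echo "unexpected key length: ${KEY_BYTES} bytes" >&2
  exit 1
fi
echo "encryption key decodes to ${KEY_BYTES} bytes"
```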

ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

cat > encryption-config.yaml << EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF

5. Distribute the Client and Server Certificates

Copy certificate to worker nodes

sudo scp ca.pem k8s-worker-1-key.pem k8s-worker-1.pem k8s-worker-1.kubeconfig kube-proxy.kubeconfig pi@172.30.1.42:~/

sudo scp ca.pem k8s-worker-2-key.pem k8s-worker-2.pem k8s-worker-2.kubeconfig kube-proxy.kubeconfig pi@172.30.1.43:~/

Copy certificates to master nodes

sudo scp ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem service-account-key.pem service-account.pem encryption-config.yaml kube-controller-manager.kubeconfig kube-scheduler.kubeconfig admin.kubeconfig pi@172.30.1.40:~/

sudo scp ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem service-account-key.pem service-account.pem encryption-config.yaml kube-controller-manager.kubeconfig kube-scheduler.kubeconfig admin.kubeconfig pi@172.30.1.41:~/
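
The four copy commands follow two fixed file lists, so they can be generated rather than typed. The sketch below is an assumption-laden illustration: `worker_files`, `master_files`, `copy_to`, `DRY_RUN`, and `REMOTE_USER` are hypothetical names, and with `DRY_RUN=1` the commands are only printed for review.

```shell
#!/usr/bin/env bash
DRY_RUN=1          # set to 0 to actually run scp
REMOTE_USER=pi     # remote account used in this walkthrough

# Files a worker needs: CA cert, its own kubelet cert/key/kubeconfig, kube-proxy kubeconfig.
worker_files() {
  local host="$1"
  echo "ca.pem ${host}-key.pem ${host}.pem ${host}.kubeconfig kube-proxy.kubeconfig"
}

# Files a master needs: CA pair, API server and service-account pairs,
# the encryption config, and the control-plane kubeconfigs.
master_files() {
  echo "ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem" \
       "service-account-key.pem service-account.pem encryption-config.yaml" \
       "kube-controller-manager.kubeconfig kube-scheduler.kubeconfig admin.kubeconfig"
}

# copy_to IP FILE...: print (dry run) or execute the scp command.
copy_to() {
  local ip="$1"
  shift
  if [ "${DRY_RUN}" = "1" ]; then
    echo "scp $* ${REMOTE_USER}@${ip}:~/"
  else
    scp "$@" "${REMOTE_USER}@${ip}:~/"
  fi
}

# Word splitting of the file lists is intentional here.
copy_to 172.30.1.42 $(worker_files k8s-worker-1)
copy_to 172.30.1.43 $(worker_files k8s-worker-2)
copy_to 172.30.1.40 $(master_files)
copy_to 172.30.1.41 $(master_files)
```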

6. Bootstrapping the ETCD cluster

Kubernetes components are stateless and store cluster state in etcd.

# Download and Install the etcd binaries

wget -q --show-progress --https-only --timestamping "https://github.com/etcd-io/etcd/releases/download/v3.4.15/etcd-v3.4.15-linux-arm64.tar.gz"

tar -xvf etcd-v3.4.15-linux-arm64.tar.gz

sudo mv etcd-v3.4.15-linux-arm64/etcd* /usr/local/bin/

---

wget https://raw.githubusercontent.com/robertojrojas/kubernetes-the-hard-way-raspberry-pi/master/etcd/etcd-3.1.5-arm.tar.gz

tar -xvf etcd-3.1.5-arm.tar.gz

# Certs to their desired location

sudo mkdir -p /etc/etcd /var/lib/etcd

sudo chmod 700 /var/lib/etcd

sudo cp ca.pem kubernetes-key.pem kubernetes.pem /etc/etcd/

# Create the etcd.service systemd unit file

Key parameters

- initial-advertise-peer-urls

- listen-peer-urls

- listen-client-urls

- advertise-client-urls

- initial-cluster
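
The `initial-cluster` value must list every member as `name=https://ip:2380`, and it has to be identical on all masters. A small sketch, assuming a hypothetical `MEMBERS` array, builds that string and also prints the quorum arithmetic. etcd needs a majority of members to accept writes, so with two members the quorum is two and losing either master stops writes.

```shell
#!/usr/bin/env bash
# MEMBERS is a hypothetical name=ip list of the etcd members (the two masters).
MEMBERS=("k8s-master-1=172.30.1.40" "k8s-master-2=172.30.1.41")

# Build "name=https://ip:2380" entries joined by commas.
INITIAL_CLUSTER=""
for member in "${MEMBERS[@]}"; do
  name="${member%%=*}"
  ip="${member#*=}"
  INITIAL_CLUSTER+="${INITIAL_CLUSTER:+,}${name}=https://${ip}:2380"
done

# Majority quorum and how many member failures the cluster survives.
MEMBER_COUNT=${#MEMBERS[@]}
QUORUM=$(( MEMBER_COUNT / 2 + 1 ))
TOLERATED_FAILURES=$(( MEMBER_COUNT - QUORUM ))

echo "INITIAL_CLUSTER=${INITIAL_CLUSTER}"
echo "members=${MEMBER_COUNT} quorum=${QUORUM} tolerated_failures=${TOLERATED_FAILURES}"
```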

ETCD_NAME=k8s-master-1

INTERNAL_IP=172.30.1.40

INITIAL_CLUSTER=k8s-master-1=https://172.30.1.40:2380,k8s-master-2=https://172.30.1.41:2380

cat << EOF | sudo tee /etc/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

ExecStart=/usr/local/bin/etcd \\

--name ${ETCD_NAME} \\

--cert-file=/etc/etcd/kubernetes.pem \\

--key-file=/etc/etcd/kubernetes-key.pem \\

--peer-cert-file=/etc/etcd/kubernetes.pem \\

--peer-key-file=/etc/etcd/kubernetes-key.pem \\

--trusted-ca-file=/etc/etcd/ca.pem \\

--peer-trusted-ca-file=/etc/etcd/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\

--advertise-client-urls https://${INTERNAL_IP}:2379 \\

--initial-cluster-token etcd-cluster-0 \\

--initial-cluster ${INITIAL_CLUSTER} \\

--initial-cluster-state new \\

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

Environment="ETCD_UNSUPPORTED_ARCH=arm64"

[Install]

WantedBy=multi-user.target

EOF

# Start etcd

sudo systemctl daemon-reload

sudo systemctl enable etcd

sudo systemctl start etcd

sudo systemctl status etcd

# Verification

sudo ETCDCTL_API=3 etcdctl member list \

--endpoints=https://127.0.0.1:2379 \

--cacert=/etc/etcd/ca.pem \

--cert=/etc/etcd/kubernetes.pem \

--key=/etc/etcd/kubernetes-key.pem

7. Bootstrapping the Kubernetes Control Plane

The following components will be installed on each master node : Kubernetes API Server, Scheduler and Controller Manager

Kubernetes API Server

# Download and move them to the right position

sudo mkdir -p /etc/kubernetes/config

wget -q --show-progress --https-only --timestamping \

"https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/arm64/kube-apiserver" \

"https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/arm64/kube-controller-manager" \

"https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/arm64/kube-scheduler" \

"https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/arm64/kubectl"

chmod +x kube-apiserver kube-controller-manager kube-scheduler kubectl

sudo mv kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

# Certs to their desired location

sudo mkdir -p /var/lib/kubernetes/

sudo cp ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \

service-account-key.pem service-account.pem \

encryption-config.yaml /var/lib/kubernetes/

# Create the kube-apiserver systemd unit file

Key parameters

- advertise-address : ${INTERNAL_IP}

- apiserver-count : 2

- etcd-servers : ${CONTROLLER0_IP}:2379,${CONTROLLER1_IP}:2379

- service-account-issuer : https://${KUBERNETES_PUBLIC_ADDRESS}:6443

- service-cluster-ip-range : 10.32.0.0/24
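
Two addresses used elsewhere in this walkthrough come out of `service-cluster-ip-range`: 10.32.0.1 in the API server certificate and 10.32.0.10 as the kubelet's cluster DNS address. The sketch below derives both from the range. `nth_ip` is a hypothetical helper that only handles offsets staying within the last octet, which is enough for a /24.

```shell
#!/usr/bin/env bash
SERVICE_CIDR="10.32.0.0/24"

# nth_ip CIDR N: print the network address plus N.
# Only valid while the result stays inside the last octet.
nth_ip() {
  local base="${1%/*}"
  local offset="$2"
  local a b c d
  IFS=. read -r a b c d <<< "${base}"
  echo "${a}.${b}.${c}.$(( d + offset ))"
}

API_SERVICE_IP=$(nth_ip "${SERVICE_CIDR}" 1)    # the "kubernetes" service IP
CLUSTER_DNS_IP=$(nth_ip "${SERVICE_CIDR}" 10)   # address used for clusterDNS

echo "kubernetes service IP: ${API_SERVICE_IP}"
echo "cluster DNS IP:        ${CLUSTER_DNS_IP}"
```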

INTERNAL_IP=172.30.1.40

KUBERNETES_PUBLIC_ADDRESS=172.30.1.40

CONTROLLER0_IP=172.30.1.40

CONTROLLER1_IP=172.30.1.41

cat << EOF | sudo tee /etc/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--advertise-address=${INTERNAL_IP} \\

--allow-privileged=true \\

--apiserver-count=2 \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/audit.log \\

--authorization-mode=Node,RBAC \\

--bind-address=0.0.0.0 \\

--client-ca-file=/var/lib/kubernetes/ca.pem \\

--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\

--etcd-cafile=/var/lib/kubernetes/ca.pem \\

--etcd-certfile=/var/lib/kubernetes/kubernetes.pem \\

--etcd-keyfile=/var/lib/kubernetes/kubernetes-key.pem \\

--etcd-servers=https://${CONTROLLER0_IP}:2379,https://${CONTROLLER1_IP}:2379 \\

--event-ttl=1h \\

--encryption-provider-config=/var/lib/kubernetes/encryption-config.yaml \\

--kubelet-certificate-authority=/var/lib/kubernetes/ca.pem \\

--kubelet-client-certificate=/var/lib/kubernetes/kubernetes.pem \\

--kubelet-client-key=/var/lib/kubernetes/kubernetes-key.pem \\

--runtime-config='api/all=true' \\

--service-account-key-file=/var/lib/kubernetes/service-account.pem \\

--service-account-signing-key-file=/var/lib/kubernetes/service-account-key.pem \\

--service-account-issuer=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \\

--service-cluster-ip-range=10.32.0.0/24 \\

--service-node-port-range=30000-32767 \\

--tls-cert-file=/var/lib/kubernetes/kubernetes.pem \\

--tls-private-key-file=/var/lib/kubernetes/kubernetes-key.pem \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start kube-apiserver

sudo systemctl daemon-reload

sudo systemctl enable kube-apiserver

sudo systemctl start kube-apiserver

sudo systemctl status kube-apiserver

Controller Manager

# Certs to their desired location

sudo cp kube-controller-manager.kubeconfig /var/lib/kubernetes/

# Create the kube-controller-manager systemd unit file

Key parameters

- cluster-cidr : 10.200.0.0/16

- service-cluster-ip-range : 10.32.0.0/24

cat << EOF | sudo tee /etc/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \\

--address=0.0.0.0 \\

--cluster-cidr=10.200.0.0/16 \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/var/lib/kubernetes/ca.pem \\

--cluster-signing-key-file=/var/lib/kubernetes/ca-key.pem \\

--kubeconfig=/var/lib/kubernetes/kube-controller-manager.kubeconfig \\

--leader-elect=true \\

--root-ca-file=/var/lib/kubernetes/ca.pem \\

--service-account-private-key-file=/var/lib/kubernetes/service-account-key.pem \\

--service-cluster-ip-range=10.32.0.0/24 \\

--use-service-account-credentials=true \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start kube-controller-manager

sudo systemctl daemon-reload

sudo systemctl enable kube-controller-manager

sudo systemctl start kube-controller-manager

sudo systemctl status kube-controller-manager

Kube Scheduler

# Certs to their desired location

sudo cp kube-scheduler.kubeconfig /var/lib/kubernetes/

# Create the kube-scheduler configuration and systemd unit file

cat << EOF | sudo tee /etc/kubernetes/config/kube-scheduler.yaml
apiVersion: kubescheduler.config.k8s.io/v1beta1
kind: KubeSchedulerConfiguration
clientConnection:
  kubeconfig: "/var/lib/kubernetes/kube-scheduler.kubeconfig"
leaderElection:
  leaderElect: true
EOF

cat << EOF | sudo tee /etc/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \\

--config=/etc/kubernetes/config/kube-scheduler.yaml \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start kube-scheduler

sudo systemctl daemon-reload

sudo systemctl enable kube-scheduler

sudo systemctl start kube-scheduler

sudo systemctl status kube-scheduler

RBAC for Kubelet Authorization

- Grant the kube-apiserver permission to request information from the worker nodes.

- Access to the kubelet API is required for retrieving metrics, logs, and executing commands in pods.

# Create an Admin ClusterRole and bind it to kubeconfig

ClusterRole

cat << EOF | kubectl apply --kubeconfig admin.kubeconfig -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
EOF

ClusterRoleBinding

cat << EOF | kubectl apply --kubeconfig admin.kubeconfig -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

# Verify that all components are working properly

kubectl get componentstatuses --kubeconfig admin.kubeconfig

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

(Optional) Enable HTTP Health Checks

# Install nginx and expose health check over http

sudo apt-get update

sudo apt-get install -y nginx

# Config proxying health check

cat > kubernetes.default.svc.cluster.local <<EOF

server {

listen 80;

server_name kubernetes.default.svc.cluster.local;

location /healthz {

proxy_pass https://127.0.0.1:6443/healthz;

proxy_ssl_trusted_certificate /var/lib/kubernetes/ca.pem;

}

}

EOF

sudo mv kubernetes.default.svc.cluster.local \

/etc/nginx/sites-available/kubernetes.default.svc.cluster.local

sudo ln -s /etc/nginx/sites-available/kubernetes.default.svc.cluster.local /etc/nginx/sites-enabled/

# start nginx and verify health check

sudo systemctl restart nginx

sudo systemctl enable nginx

kubectl cluster-info --kubeconfig admin.kubeconfig

curl -H "Host: kubernetes.default.svc.cluster.local" -i http://127.0.0.1/healthz

(Optional) 8. Kubectl remote access from local machine

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority='ca.pem' \

--embed-certs=true \

--server=https://172.30.1.40:6443

kubectl config set-credentials admin \

--client-certificate='admin.pem' \

--client-key='admin-key.pem'

kubectl config set-context kubernetes-the-hard-way \

--cluster=kubernetes-the-hard-way \

--user=admin

kubectl config use-context kubernetes-the-hard-way

9. Bootstrapping the Kubernetes Worker Nodes

- The following components will be installed on each worker node: runc, container networking plugins, containerd, kubelet, and kube-proxy

# Install the OS dependencies & Disable Swap

sudo apt-get update

sudo apt-get -y install socat conntrack ipset

sudo swapoff -a

# Download binaries and move them to desired location

wget -q --show-progress --https-only --timestamping \

https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.21.0/crictl-v1.21.0-linux-arm64.tar.gz \

https://github.com/opencontainers/runc/releases/download/v1.1.0/runc.arm64 \

https://github.com/containernetworking/plugins/releases/download/v0.9.1/cni-plugins-linux-arm64-v0.9.1.tgz \

https://github.com/containerd/containerd/releases/download/v1.6.1/containerd-1.6.1-linux-arm64.tar.gz \

https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/arm64/kubectl \

https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/arm64/kube-proxy \

https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/arm64/kubelet

sudo mkdir -p \

/etc/cni/net.d \

/opt/cni/bin \

/var/lib/kubelet \

/var/lib/kube-proxy \

/var/lib/kubernetes \

/var/run/kubernetes

mkdir containerd

tar -xvf crictl-v1.21.0-linux-arm64.tar.gz

tar -xvf containerd-1.6.1-linux-arm64.tar.gz -C containerd

sudo tar -xvf cni-plugins-linux-arm64-v0.9.1.tgz -C /opt/cni/bin/

sudo mv runc.arm64 runc

chmod +x crictl kubectl kube-proxy kubelet runc

sudo mv crictl kubectl kube-proxy kubelet runc /usr/local/bin/

sudo mv containerd/bin/* /bin/

# Configure CNI Networking

- Create the bridge network and loopback network configuration

POD_CIDR=10.200.0.0/24 # per-node value: 10.200.0.0/24 on k8s-worker-1, 10.200.1.0/24 on k8s-worker-2

cat <<EOF | sudo tee /etc/cni/net.d/10-bridge.conf

{

"cniVersion": "0.4.0",

"name": "bridge",

"type": "bridge",

"bridge": "cnio0",

"isGateway": true,

"ipMasq": true,

"ipam": {

"type": "host-local",

"ranges": [

[{"subnet": "${POD_CIDR}"}]

],

"routes": [{"dst": "0.0.0.0/0"}]

}

}

EOF

cat <<EOF | sudo tee /etc/cni/net.d/99-loopback.conf

{

"cniVersion": "0.4.0",

"name": "lo",

"type": "loopback"

}

EOF

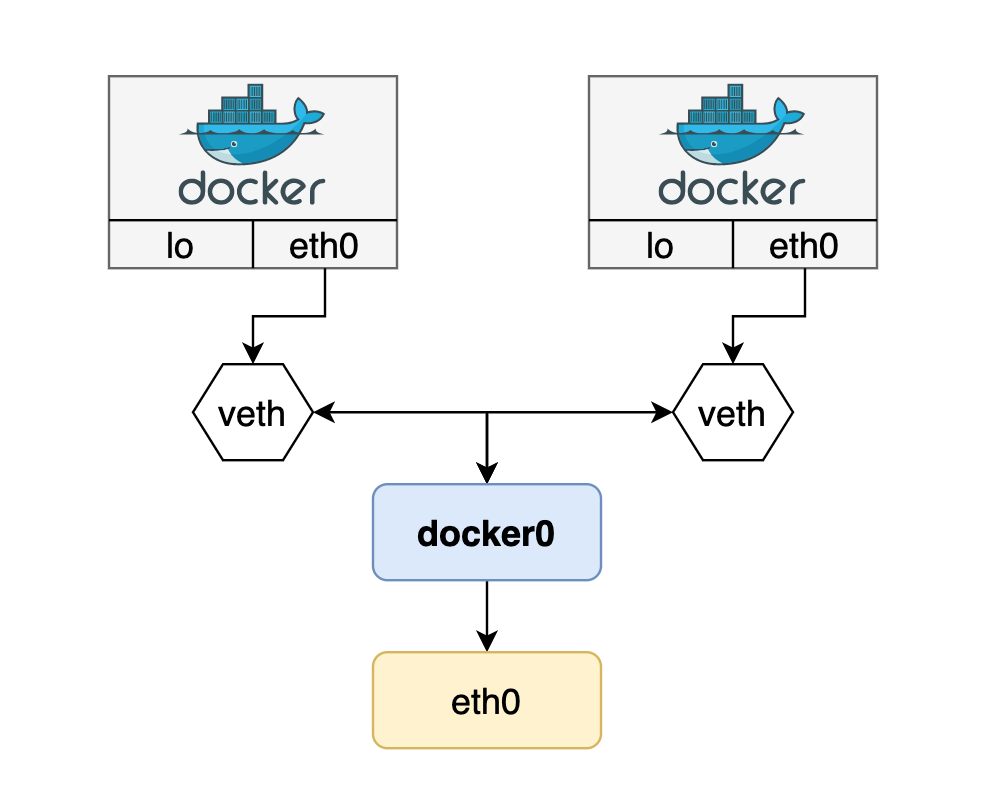

CNI

# Configure Containerd

sudo mkdir -p /etc/containerd/

cat << EOF | sudo tee /etc/containerd/config.toml

[plugins]

[plugins.cri.containerd]

snapshotter = "overlayfs"

[plugins.cri.containerd.default_runtime]

runtime_type = "io.containerd.runtime.v1.linux"

runtime_engine = "/usr/local/bin/runc"

runtime_root = ""

EOF

# Create the containerd systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/containerd.service

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target

[Service]

ExecStartPre=/sbin/modprobe overlay

ExecStart=/bin/containerd

Restart=always

RestartSec=5

Delegate=yes

KillMode=process

OOMScoreAdjust=-999

LimitNOFILE=1048576

LimitNPROC=infinity

LimitCORE=infinity

[Install]

WantedBy=multi-user.target

EOF

# Start containerd

sudo systemctl daemon-reload

sudo systemctl enable containerd

sudo systemctl start containerd

Kubelet

# Certs to the desired location

sudo cp k8s-worker-1-key.pem k8s-worker-1.pem /var/lib/kubelet/

sudo cp k8s-worker-1.kubeconfig /var/lib/kubelet/kubeconfig

sudo cp ca.pem /var/lib/kubernetes/

# Configure the kubelet

- authorization mode : webhook

POD_CIDR=10.200.0.0/24

cat <<EOF | sudo tee /var/lib/kubelet/kubelet-config.yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/var/lib/kubernetes/ca.pem"
authorization:
  mode: Webhook
clusterDomain: "cluster.local"
clusterDNS:
  - "10.32.0.10"
podCIDR: "${POD_CIDR}"
resolvConf: "/run/systemd/resolve/resolv.conf"
runtimeRequestTimeout: "15m"
tlsCertFile: "/var/lib/kubelet/${HOSTNAME}.pem"
tlsPrivateKeyFile: "/var/lib/kubelet/${HOSTNAME}-key.pem"
EOF

# Create the kubelet systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \\

--config=/var/lib/kubelet/kubelet-config.yaml \\

--container-runtime=remote \\

--container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \\

--image-pull-progress-deadline=2m \\

--kubeconfig=/var/lib/kubelet/kubeconfig \\

--network-plugin=cni \\

--register-node=true \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start kubelet

sudo systemctl daemon-reload

sudo systemctl enable kubelet

sudo systemctl start kubelet

* After a reboot, the following message no longer appears.

"Failed to get the kubelet's cgroup. Kubelet system container metrics may be missing." err="mountpoint for memory not found"

Kube-proxy

# Certs to the desired location

sudo mv kube-proxy.kubeconfig /var/lib/kube-proxy/kubeconfig

# Configuration & Systemd unit

cat <<EOF | sudo tee /var/lib/kube-proxy/kube-proxy-config.yaml
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  kubeconfig: "/var/lib/kube-proxy/kubeconfig"
mode: "iptables"
clusterCIDR: "10.200.0.0/16"
EOF

cat <<EOF | sudo tee /etc/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-proxy \\

--config=/var/lib/kube-proxy/kube-proxy-config.yaml

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start kubelet, kube-proxy

sudo systemctl daemon-reload

sudo systemctl enable kubelet kube-proxy

sudo systemctl start kubelet kube-proxy

# Verification

kubectl get no --kubeconfig=admin.kubeconfig

NAME STATUS ROLES AGE VERSION

k8s-worker-1 Ready <none> 40m v1.21.0

k8s-worker-2 Ready <none> 40m v1.21.0

10. Networking

Pods scheduled to a node receive an IP address from the node's Pod CIDR range. At this point pods can not communicate with other pods running on different nodes due to missing network routes.

We are going to install Flannel to implement the Kubernetes networking model.

Flannel

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml --kubeconfig=admin.kubeconfig

- pod cidr not assigned

kubectl logs -f kube-flannel-ds-x56l5 -n kube-system --kubeconfig=admin.kubeconfig

I0316 05:37:58.801461 1 main.go:205] CLI flags config: {etcdEndpoints:http://127.0.0.1:4001,http://127.0.0.1:2379 etcdPrefix:/coreos.com/network etcdKeyfile: etcdCertfile: etcdCAFile: etcdUsername: etcdPassword: version:false kubeSubnetMgr:true kubeApiUrl: kubeAnnotationPrefix:flannel.alpha.coreos.com kubeConfigFile: iface:[] ifaceRegex:[] ipMasq:true subnetFile:/run/flannel/subnet.env publicIP: publicIPv6: subnetLeaseRenewMargin:60 healthzIP:0.0.0.0 healthzPort:0 iptablesResyncSeconds:5 iptablesForwardRules:true netConfPath:/etc/kube-flannel/net-conf.json setNodeNetworkUnavailable:true}

W0316 05:37:58.801882 1 client_config.go:614] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

I0316 05:37:59.907489 1 kube.go:120] Waiting 10m0s for node controller to sync

I0316 05:37:59.907871 1 kube.go:378] Starting kube subnet manager

I0316 05:38:00.908610 1 kube.go:127] Node controller sync successful

I0316 05:38:00.908732 1 main.go:225] Created subnet manager: Kubernetes Subnet Manager - k8s-worker-1

I0316 05:38:00.908762 1 main.go:228] Installing signal handlers

I0316 05:38:00.909447 1 main.go:454] Found network config - Backend type: vxlan

I0316 05:38:00.909557 1 match.go:189] Determining IP address of default interface

I0316 05:38:00.911032 1 match.go:242] Using interface with name eth0 and address 172.30.1.42

I0316 05:38:00.911156 1 match.go:264] Defaulting external address to interface address (172.30.1.42)

I0316 05:38:00.911399 1 vxlan.go:138] VXLAN config: VNI=1 Port=0 GBP=false Learning=false DirectRouting=false

E0316 05:38:00.912700 1 main.go:317] Error registering network: failed to acquire lease: node "k8s-worker-1" pod cidr not assigned

I0316 05:38:00.913055 1 main.go:434] Stopping shutdownHandler...

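W0316 05:38:00.913524 1 reflector.go:436] github.com/flannel-io/flannel/subnet/kube/kube.go:379: watch of *v1.Node ended with: an error on the server ("unable to decode an event from the watch stream: context canceled") has prevented the request from succeeding

With kubeSubnetMgr:true, Flannel takes each node's subnet from the node's spec.podCIDR, so acquiring the lease fails while that field is empty. The field is normally filled in by kube-controller-manager when it runs with --allocate-node-cidrs=true and --cluster-cidr. Here it was resolved by setting it by hand with the commands below.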

kubectl patch node k8s-worker-1 -p '{"spec":{"podCIDR":"10.200.0.0/24"}}' --kubeconfig=admin.kubeconfig

kubectl patch node k8s-worker-2 -p '{"spec":{"podCIDR":"10.200.1.0/24"}}' --kubeconfig=admin.kubeconfig
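
The two patch commands above follow one pattern: the Nth worker (counting from 0) gets 10.200.N.0/24. A minimal sketch of that pattern as a loop is shown here. The function names `pod_cidr_patch` and `patch_workers` and the `DRY_RUN` switch are hypothetical helpers, not part of kubectl, and the sketch assumes the 10.200.N.0/24 layout with N between 0 and 255.

```shell
#!/usr/bin/env bash
# Sketch: build the podCIDR patch body for the Nth worker (0-based index).
# Assumes the 10.200.<index>.0/24 layout used in the two commands above.
pod_cidr_patch() {
  printf '{"spec":{"podCIDR":"10.200.%d.0/24"}}' "$1"
}

# Patch every node passed as an argument, in order.
# With DRY_RUN=1 (hypothetical switch) the commands are only printed.
patch_workers() {
  index=0
  for node in "$@"; do
    body="$(pod_cidr_patch "$index")"
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "kubectl patch node ${node} -p '${body}' --kubeconfig=admin.kubeconfig"
    else
      kubectl patch node "$node" -p "$body" --kubeconfig=admin.kubeconfig
    fi
    index=$((index + 1))
  done
}
```

Running `DRY_RUN=1 patch_workers k8s-worker-1 k8s-worker-2` prints the two commands without touching the cluster. Note that podCIDR cannot be changed once it is set on a node, so it is worth checking the printed values first.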

10. Cluster Build Results and Testing

Check the worker nodes

kubectl get no --kubeconfig admin.kubeconfig

NAME STATUS ROLES AGE VERSION

k8s-worker-1 Ready <none> 110m v1.21.0

k8s-worker-2 Ready <none> 53m v1.21.0

Check the Flannel deployment

kubectl get po -n kube-system -o wide --kubeconfig admin.kubeconfig -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-flannel-ds-hbk2w 1/1 Running 0 3m18s 172.30.1.43 k8s-worker-2 <none> <none>

kube-flannel-ds-kmx9k 1/1 Running 0 3m18s 172.30.1.42 k8s-worker-1 <none> <none>

Deploy Nginx and verify

cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      run: nginx
  replicas: 2
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx
        ports:
        - containerPort: 80
EOF

deployment.apps/nginx created

kubectl get po -o wide --kubeconfig admin.kubeconfig -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-7bddd8c596-6x7gn 1/1 Running 0 44s 10.200.0.10 k8s-worker-1 <none> <none>

nginx-7bddd8c596-vptkl 1/1 Running 0 44s 10.200.1.2 k8s-worker-2 <none> <none>

kubectl run curl-tester --image=nginx --kubeconfig admin.kubeconfig

kubectl exec -it curl-tester --kubeconfig admin.kubeconfig -- curl http://10.200.1.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

kubectl exec -it curl-tester --kubeconfig admin.kubeconfig -- curl http://10.200.0.10

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

11. Deploying the DNS Cluster Add-on

The DNS add-on provides DNS-based service discovery, backed by CoreDNS, to applications running inside the Kubernetes cluster.

# Deploy the coredns cluster add-on

kubectl apply -f https://storage.googleapis.com/kubernetes-the-hard-way/coredns-1.8.yaml

kubectl get svc -n kube-system --kubeconfig admin.kubeconfig

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.32.0.10 <none> 53/UDP,53/TCP,9153/TCP 44m

kubectl get svc --kubeconfig admin.kubeconfig

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.32.0.1 <none> 443/TCP 6h19m

nginx ClusterIP 10.32.0.37 <none> 80/TCP 40m

kubectl get po -n kube-system --kubeconfig admin.kubeconfig -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-8494f9c688-658c2 1/1 Running 0 69m 10.200.0.12 k8s-worker-1 <none> <none>

coredns-8494f9c688-k8lql 1/1 Running 0 69m 10.200.1.4 k8s-worker-2 <none> <none>

# Verification

kubectl exec -it busybox --kubeconfig admin.kubeconfig -- nslookup nginx

Server: 10.32.0.10

Address: 10.32.0.10:53

Name: nginx.default.svc.cluster.local

Address: 10.32.0.37

kubectl exec -it curl-tester --kubeconfig admin.kubeconfig -- curl http://nginx.default.svc.cluster.local

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# Debugging

kubectl exec -it busybox --kubeconfig admin.kubeconfig -- cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local

nameserver 10.32.0.10

options ndots:5

E0316 08:14:40.416650 23982 v3.go:79] EOF

[INFO] 10.200.0.11:48289 - 37888 "A IN kubernetes.default.svc.cluster.local. udp 54 false 512" NOERROR qr,aa,rd 106 0.000881653s

[INFO] 10.200.0.11:48289 - 37888 "A IN kubernetes.svc.cluster.local. udp 46 false 512" NXDOMAIN qr,aa,rd 139 0.001551175s

[INFO] 10.200.0.11:48289 - 37888 "AAAA IN kubernetes.default.svc.cluster.local. udp 54 false 512" NOERROR qr,aa,rd 147 0.000719937s

[INFO] 10.200.0.11:48289 - 37888 "AAAA IN kubernetes.svc.cluster.local. udp 46 false 512" NXDOMAIN qr,aa,rd 139 0.001028995s

[INFO] 10.200.0.11:48289 - 37888 "A IN kubernetes.cluster.local. udp 42 false 512" NXDOMAIN qr,aa,rd 135 0.001927212s

[INFO] 10.200.0.11:48289 - 37888 "AAAA IN kubernetes.cluster.local. udp 42 false 512" NXDOMAIN qr,aa,rd 135 0.00215127s
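
The NXDOMAIN lines above come from the `search` list in /etc/resolv.conf: the short name `kubernetes` has fewer dots than `ndots:5`, so the client asks for it with each search suffix appended, and only the `default.svc.cluster.local` variant exists. A minimal sketch of that expansion follows. The function name `expand_search` is hypothetical, and the logic is deliberately simplified: real resolvers differ in query order and in whether they also try the bare name (the busybox queries logged above show only the suffixed names).

```shell
# Sketch: list the names a resolver may try for a query, given ndots and a search list.
# Usage: expand_search NAME NDOTS SUFFIX...
expand_search() {
  name="$1"; ndots="$2"; shift 2
  # Count the dots in the name by deleting every character that is not a dot.
  dots="$(printf '%s' "$name" | tr -cd '.' | wc -c | tr -d ' ')"
  if [ "$dots" -ge "$ndots" ]; then
    # Enough dots: the name is treated as fully qualified (simplification).
    echo "${name}."
    return 0
  fi
  # Too few dots: append each search suffix in order.
  for suffix in "$@"; do
    echo "${name}.${suffix}."
  done
}
```

For example, `expand_search kubernetes 5 default.svc.cluster.local svc.cluster.local cluster.local` prints the three names that appear in the CoreDNS log above.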

kubectl edit -n kube-system configmaps coredns --kubeconfig admin.kubeconfig

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        cache 30
        loop
        reload
        loadbalance
        log
    }

Let's briefly go through the Corefile configuration.

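- errors : Errors are written to stdout.

- health : CoreDNS health can be checked at http://localhost:8080/health.

- ready : To check whether CoreDNS is ready to serve queries, send an HTTP request to http://localhost:8181/ready. It returns 200 OK once CoreDNS is ready.

- kubernetes : Answers DNS queries based on Kubernetes Service domains and POD IPs. The ttl option sets the TTL of the responses.

  - The pods option controls how POD-IP-based DNS queries are handled. The default is disabled, and the insecure value exists for backward compatibility with kube-dns.

  - With pods disabled, POD-IP-based DNS queries are not answered. For example, if a POD in the testbed namespace has the IP 10.244.2.16, a query for 10-244-2-16.testbed.pod.cluster.local returns no A record.

  - pods insecure always returns an A record built from the IP in the query name, without checking it against Kubernetes. The behavior I first assumed, returning an A record only when a POD with a matching IP exists in the same namespace, belongs to pods verified. This matches my quick test: PODs created in different namespaces could still reach each other.

- prometheus : Metrics in Prometheus format are exposed on the given port (:9153). To send HTTP requests to CoreDNS through its Service, including health on :8080 and ready on :8181, the CoreDNS Service object must expose ports :9153, :8080, and :8181.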
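
The POD-IP-based name used in the example above is formed by replacing the dots of the IP with dashes and appending the namespace, `pod`, and the cluster domain. A minimal sketch, assuming an IPv4 address and the `cluster.local` domain from the Corefile; the function name `pod_dns_name` is hypothetical.

```shell
# Sketch: build the POD A-record name for a POD IP,
# e.g. 10.244.2.16 in namespace testbed -> 10-244-2-16.testbed.pod.cluster.local
# Usage: pod_dns_name POD_IP NAMESPACE [CLUSTER_DOMAIN]
pod_dns_name() {
  ip="$1"; namespace="$2"; domain="${3:-cluster.local}"
  # Replace the dots of the IPv4 address with dashes.
  dashed="$(printf '%s' "$ip" | tr '.' '-')"
  echo "${dashed}.${namespace}.pod.${domain}"
}
```

With the busybox POD used in this post, the name could be checked with `kubectl exec -it busybox --kubeconfig admin.kubeconfig -- nslookup "$(pod_dns_name 10.200.0.10 default)"`.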

12. Pod to Internet

Add forward to the Corefile so that names outside the cluster are sent to an upstream resolver (8.8.8.8 here).

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        cache 30
        loop
        reload
        loadbalance
        log
        forward . 8.8.8.8
    }

Ping Google to verify

kubectl exec -it busybox --kubeconfig admin.kubeconfig -- ping www.google.com

PING www.google.com (172.217.175.36): 56 data bytes

64 bytes from 172.217.175.36: seq=0 ttl=113 time=31.225 ms

64 bytes from 172.217.175.36: seq=1 ttl=113 time=31.720 ms

64 bytes from 172.217.175.36: seq=2 ttl=113 time=32.036 ms

64 bytes from 172.217.175.36: seq=3 ttl=113 time=32.769 ms

64 bytes from 172.217.175.36: seq=4 ttl=113 time=31.890 ms

kubectl exec -it busybox --kubeconfig admin.kubeconfig -- nslookup nginx

Server: 10.32.0.10

Address: 10.32.0.10:53

Name: nginx.default.svc.cluster.local

Address: 10.32.0.37

Debugging

[INFO] Reloading complete

[INFO] 127.0.0.1:51732 - 10283 "HINFO IN 790223070724377814.6189194672555963484. udp 56 false 512" NXDOMAIN qr,rd,ra,ad 131 0.033668927s

[INFO] 10.200.0.11:44705 - 5 "AAAA IN www.google.com. udp 32 false 512" NOERROR qr,rd,ra 74 0.034105169s

[INFO] 10.200.0.11:44224 - 6 "A IN www.google.com. udp 32 false 512" NOERROR qr,rd,ra 62 0.034133246s

[INFO] 10.200.0.11:41908 - 6656 "A IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.000872899s

[INFO] 10.200.0.11:41908 - 6656 "A IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.001095134s

[INFO] 10.200.0.11:41908 - 6656 "AAAA IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 142 0.001334348s

[INFO] 10.200.0.11:41908 - 6656 "AAAA IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.001338932s

[INFO] 10.200.0.11:41908 - 6656 "A IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 96 0.002403233s

[INFO] 10.200.0.11:41908 - 6656 "AAAA IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.00264s

[INFO] 10.200.0.11:41908 - 6656 "A IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 96 0.000500251s

[INFO] 10.200.0.11:41908 - 6656 "AAAA IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.000283484s

[INFO] 10.200.0.11:41908 - 6656 "AAAA IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 142 0.000317755s

[INFO] 10.200.0.11:41908 - 6656 "A IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.000322389s

[INFO] 10.200.0.11:41908 - 6656 "AAAA IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.00025812s

[INFO] 10.200.0.11:41908 - 6656 "A IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.000913992s

[INFO] 10.200.0.11:56880 - 7936 "A IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 96 0.000680195s

[INFO] 10.200.0.11:56880 - 7936 "AAAA IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 142 0.000718059s

[INFO] 10.200.0.11:56880 - 7936 "A IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.001300027s

[INFO] 10.200.0.11:56880 - 7936 "AAAA IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.002253237s

[INFO] 10.200.0.11:56880 - 7936 "AAAA IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.001340651s

[INFO] 10.200.0.11:56880 - 7936 "A IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.000766964s

[INFO] 10.200.0.11:56880 - 7936 "A IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 96 0.000423221s

[INFO] 10.200.0.11:56880 - 7936 "A IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.000411711s

[INFO] 10.200.0.11:56880 - 7936 "A IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.000823578s

[INFO] 10.200.0.11:56880 - 7936 "AAAA IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 142 0.001064718s

[INFO] 10.200.0.11:56880 - 7936 "AAAA IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.001470023s

[INFO] 10.200.0.11:56880 - 7936 "AAAA IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.001802204s

[INFO] Reloading

W0316 08:37:06.872387 1 warnings.go:70] discovery.k8s.io/v1beta1 EndpointSlice is deprecated in v1.21+, unavailable in v1.25+; use discovery.k8s.io/v1 EndpointSlice

W0316 08:37:06.878515 1 warnings.go:70] discovery.k8s.io/v1beta1 EndpointSlice is deprecated in v1.21+, unavailable in v1.25+; use discovery.k8s.io/v1 EndpointSlice

[INFO] plugin/reload: Running configuration MD5 = 4507d64c02fd8d12322b2944d3f2f975

[INFO] Reloading complete

[INFO] 127.0.0.1:37902 - 16284 "HINFO IN 968904221266650254.5526836193363943015. udp 56 false 512" NXDOMAIN qr,rd,ra,ad 131 0.034053296s

[INFO] 10.200.0.0:56308 - 7 "A IN www.google.com. udp 32 false 512" NOERROR qr,rd,ra 62 0.03481522s

[INFO] 10.200.0.0:60813 - 9216 "A IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 96 0.001246092s

[INFO] 10.200.0.0:60813 - 9216 "A IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.001978799s

[INFO] 10.200.0.0:60813 - 9216 "A IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.002515881s

[INFO] 10.200.0.0:60813 - 9216 "AAAA IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.003136974s

[INFO] 10.200.0.0:60813 - 9216 "AAAA IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.004195045s

[INFO] 10.200.0.0:60813 - 9216 "AAAA IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 142 0.004489419s

[INFO] 10.200.0.0:60813 - 9216 "A IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.000557082s

[INFO] 10.200.0.0:60813 - 9216 "A IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.000709165s

[INFO] 10.200.0.0:60813 - 9216 "AAAA IN nginx.svc.cluster.local. udp 41 false 512" NXDOMAIN qr,aa,rd 134 0.002432965s

[INFO] 10.200.0.0:60813 - 9216 "AAAA IN nginx.cluster.local. udp 37 false 512" NXDOMAIN qr,aa,rd 130 0.002911193s

[INFO] 10.200.0.0:60813 - 9216 "AAAA IN nginx.default.svc.cluster.local. udp 49 false 512" NOERROR qr,aa,rd 142 0.003832859s

W0316 08:45:26.938383 1 warnings.go:70] discovery.k8s.io/v1beta1 EndpointSlice is deprecated in v1.21+, unavailable in v1.25+; use discovery.k8s.io/v1 EndpointSlice

- https://www.daveevans.us/posts/kubernetes-on-raspberry-pi-the-hard-way-part-1/


- https://github.com/cloudfoundry-incubator/kubo-deployment/issues/346

- https://phoenixnap.com/kb/enable-ssh-raspberry-pi

- https://github.com/Nek0trkstr/Kubernetes-The-Hard-Way-Raspberry-Pi/tree/master/00-before_you_begin

- https://github.com/kelseyhightower/kubernetes-the-hard-way/tree/master/docs

- k8s authentication : https://coffeewhale.com/kubernetes/authentication/x509/2020/05/02/auth01/

- coredns : https://jonnung.dev/kubernetes/2020/05/11/kubernetes-dns-about-coredns/
