The components responsible for Kubernetes networking are the CNI plugin (Weave Net, flannel, etc.), kube-proxy, and CoreDNS.

Let's start with the CNI plugin.

CNI Plugin

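Out of the box, Kubernetes ships with a basic network plugin called kubenet. It does not implement advanced features such as cross-node networking or network policies. You therefore need a network plugin that complies with the CNI specification (that is, one that implements the Kubernetes networking model) as the Pod networking interface.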

Kubernetes Networking Model

- Every Pod should have an IP address.

- Every Pod should be able to communicate with every other Pod on the same node.

- Every Pod should be able to communicate with every other Pod on other nodes without NAT.

Main functions of a CNI plugin

1. The container runtime must create the network namespace.

2. Identify the network the container must attach to.

3. The container runtime invokes the network plugin (e.g. bridge) when a container is added. The plugin then:

   - creates a veth pair
   - attaches the veth pair
   - assigns an IP address
   - brings up the interface

4. The container runtime invokes the network plugin (e.g. bridge) when a container is deleted. The plugin then:

   - deletes the veth pair

5. The network configuration is written in JSON format.

Main responsibilities of a CNI plugin

- Must support arguments ADD/DEL/CHECK

- Must support parameters such as the container ID and network namespace

- Must manage IP address assignment to Pods

- Must return results in a specific format
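The following Python sketch shows the ADD/DEL/CHECK contract in concrete form. It models what a plugin does with its inputs, using a toy in-memory IP pool. Real plugins are executables: they read `CNI_COMMAND`, `CNI_CONTAINERID`, `CNI_NETNS`, and related environment variables, take the JSON network config on stdin, and print a JSON result. `LEASES`, `cni_add`, `cni_del`, `cni_check`, and `run` are hypothetical names. The veth and namespace work is only described in comments, and the result shape roughly follows the `ips` list of the CNI result format.

```python
import ipaddress
import json
from typing import Callable

# Hypothetical in-memory lease table (container ID -> IP). A real IPAM plugin
# such as host-local persists its leases on disk instead.
LEASES: dict[str, ipaddress.IPv4Address] = {}


def cni_add(conf: dict, container_id: str) -> dict:
    """ADD: allocate an IP for the container and return a CNI-style result."""
    subnet = ipaddress.ip_network(conf["ipam"]["subnet"])
    hosts = subnet.hosts()
    gateway = next(hosts)  # assumption: first usable address is the bridge gateway
    taken = set(LEASES.values())
    ip = next((h for h in hosts if h not in taken), None)
    if ip is None:
        raise RuntimeError("subnet exhausted")
    LEASES[container_id] = ip
    # A real plugin would now create the veth pair, attach one end to the
    # bridge, move the other into the container netns, assign `ip`, and
    # bring the interface up.
    return {
        "cniVersion": conf["cniVersion"],
        "ips": [{"address": f"{ip}/{subnet.prefixlen}", "gateway": str(gateway)}],
    }


def cni_del(conf: dict, container_id: str) -> dict:
    """DEL: release the container's IP (a real plugin also deletes the veth pair)."""
    LEASES.pop(container_id, None)
    return {}


def cni_check(conf: dict, container_id: str) -> dict:
    """CHECK: confirm the container still holds its lease."""
    if container_id not in LEASES:
        raise RuntimeError(f"no IP allocated for {container_id}")
    return {}


HANDLERS: dict[str, Callable[[dict, str], dict]] = {
    "ADD": cni_add,
    "DEL": cni_del,
    "CHECK": cni_check,
}


def run(command: str, stdin_json: str, container_id: str) -> str:
    """Dispatch the way a plugin binary would: `command` stands in for
    CNI_COMMAND, `container_id` for CNI_CONTAINERID, and `stdin_json` for the
    network configuration the runtime writes to the plugin's stdin."""
    if command not in HANDLERS:
        raise ValueError(f"unsupported CNI_COMMAND: {command}")
    conf = json.loads(stdin_json)
    return json.dumps(HANDLERS[command](conf, container_id))
```

Calling `run("ADD", ...)` twice with a `10.22.0.0/16` subnet hands out `10.22.0.2/16` and then `10.22.0.3/16`, with `10.22.0.1` reported as the gateway. A DEL returns the address to the pool.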

Note: CNI configuration

The CNI settings are passed to the kubelet when it starts:

```shell
ps -aux | grep kubelet
```

```
# kubelet.service
ExecStart=/usr/local/bin/kubelet \
  --config=/var/lib/kubelet/kubelet-config.yaml \
  --container-runtime=remote \
  --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \
  --image-pull-progress-deadline=2m \
  --kubeconfig=/var/lib/kubelet/kubeconfig \
  --network-plugin=cni \
  --cni-bin-dir=/opt/cni/bin \
  --cni-conf-dir=/etc/cni/net.d \
  --register-node=true \
  --v=2
```
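When `--cni-conf-dir` contains several network configs, only one of them is used. The kubelet of this era loaded the file whose name sorts first, which is why config files carry numeric prefixes such as `10-`. These kubelet flags were removed together with dockershim in Kubernetes 1.24; after that, the container runtime (e.g. containerd) reads the directory itself. The sketch below models only the selection rule. The accepted extensions (`.conf`, `.conflist`, `.json`) mirror libcni and are an assumption here, and `pick_cni_config` is a hypothetical helper.

```python
from pathlib import PurePosixPath
from typing import Optional

# File extensions treated as CNI network configs (assumption, mirroring libcni).
CNI_CONF_EXTENSIONS = {".conf", ".conflist", ".json"}


def pick_cni_config(filenames: list[str]) -> Optional[str]:
    """Return the config that would be loaded from --cni-conf-dir:
    the lexically first name with a recognised extension, or None."""
    candidates = sorted(
        name for name in filenames
        if PurePosixPath(name).suffix in CNI_CONF_EXTENSIONS
    )
    return candidates[0] if candidates else None
```

With the two files shown in the IPAM note, `10-bridge.conf` would be chosen over `net-script.conf`.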

Note: CNI IPAM

IPAM manages IP address assignment (DHCP, host-local, ...).

```shell
cat /etc/cni/net.d/net-script.conf
```

```json
{
  "cniVersion": "0.2.0",
  "name": "mynet",
  "type": "net-script",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.244.0.0/16",
    "routes": [
      { "dst": "0.0.0.0/0" }
    ]
  }
}
```

```shell
cat /etc/cni/net.d/10-bridge.conf
```

```json
{
  "cniVersion": "0.2.0",
  "name": "mynet",
  "type": "bridge",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.22.0.0/16",
    "routes": [
      { "dst": "0.0.0.0/0" }
    ]
  }
}
```

Pod Networking with a CNI Plugin (flannel)

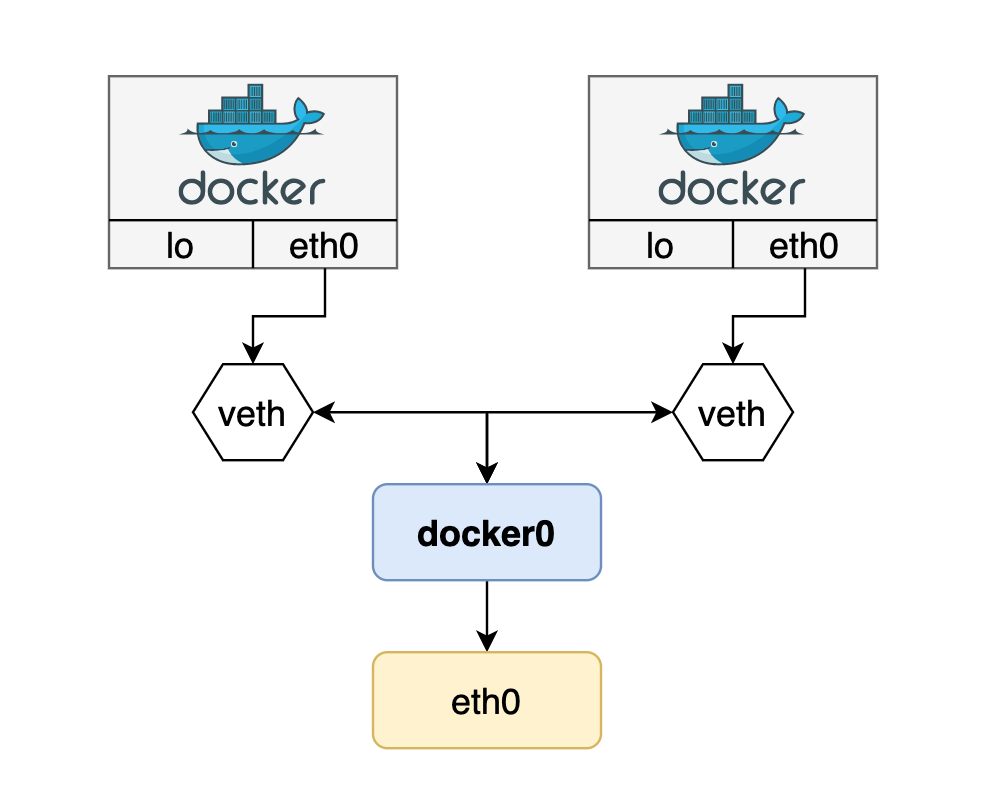

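Each Pod gets its own IP address and connects to the node's network through a virtual Ethernet (veth) pair. All containers in a Pod share that IP. Through the network interfaces that the CNI plugin sets up, Pods can reach each other by their unique IP addresses. Pods on different nodes, however, must go through a router to communicate.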
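Cross-node delivery comes down to a routing decision. Each node owns a slice of the Pod network, so the destination Pod IP tells you which node the packet must reach. The sketch below assumes a hypothetical two-node cluster; the names `node01`/`node02`, their node IPs, and their Pod subnets are made up. It ignores overlay encapsulation such as flannel's VXLAN.

```python
import ipaddress

# Hypothetical two-node cluster: node name -> (node IP, Pod subnet on that node).
NODES = {
    "node01": ("192.168.1.11", ipaddress.ip_network("10.244.1.0/24")),
    "node02": ("192.168.1.12", ipaddress.ip_network("10.244.2.0/24")),
}


def next_hop(src_node: str, dst_pod_ip: str) -> str:
    """Describe where a packet from a Pod on `src_node` to `dst_pod_ip` goes."""
    dst = ipaddress.ip_address(dst_pod_ip)
    for node, (node_ip, pod_cidr) in NODES.items():
        if dst in pod_cidr:
            if node == src_node:
                return "local bridge"  # same node: delivered through the bridge (e.g. cni0)
            return f"via {node_ip}"  # other node: routed to the node owning the subnet
    return "default gateway"  # not a Pod IP: leaves through the node's default route
```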

Service Networking with Kube-proxy

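A Service forwards the traffic it receives to the Pods chosen by its selector. Like a Pod, a Service has a virtual IP address. Unlike a Pod IP, however, that address does not show up in `ip addr` or in the routing table. Inspecting the NAT table with the `iptables` command does reveal the related rules.

Kubernetes uses kube-proxy for Service networking. In an IP (Layer 3) network, a host that cannot find the destination locally forwards the packet to its upstream gateway. For Service traffic, the final destination is instead resolved through kube-proxy's rules before the traffic reaches the router.

As of this writing (2021-11-28), kube-proxy's default proxy mode is iptables. Because kube-proxy is deployed as a DaemonSet, it runs on every node. In this mode kube-proxy does not proxy traffic itself. It leaves all of that work to netfilter: matching the Service IP and forwarding to a Pod. kube-proxy only updates the netfilter rules through iptables.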

--proxy-mode ProxyMode

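The proxy mode to use: 'userspace' (older), 'iptables' (faster), 'ipvs', or 'kernelspace' (Windows). If left blank, the best available proxy (currently iptables) is used. If the iptables proxy is selected but the system's kernel or iptables version is insufficient, kube-proxy always falls back to the userspace proxy.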

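Note: iptables and netfilter

- iptables: a user-space interface for controlling packet flow. It installs rules into netfilter, which then forwards packets according to those rules.

- netfilter: lives in kernel space and observes the lifecycle of every packet. When a packet matches a rule, it performs the defined action.

You can list the NAT table configured on a node with `sudo iptables -t nat -S` or `sudo iptables -t nat -L`.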

```
# netfilter chains (not an exhaustive list)
KUBE-SVC-XXX
KUBE-SERVICES
KUBE-SEP-XXX
KUBE-POSTROUTING
KUBE-NODEPORTS
KUBE-MARK-DROP
KUBE-MARK-MASQ
DOCKER
POSTROUTING
PREROUTING
OUTPUT
KUBE-PROXY-CANARY
KUBE-KUBELET-CANARY
```

```
# Look up the chains for a specific Service
iptables -L -t nat | grep db-service
KUBE-SVC-XA5OGUC7YRHOS3PU tcp -- anywhere 10.103.132.104 /* default/db-service: cluster IP */ tcp dpt:3306
DNAT tcp -- anywhere anywhere /* default/db-service: */ tcp to:10.244.1.2:3306
KUBE-SEP-JBWCWHHQM57V2WN7 all -- anywhere anywhere /* default/db-service: */
```

kube-proxy receives information from the API server on the master node and adds or removes these chains accordingly. By updating iptables continuously, it keeps the netfilter rules current and manages the Service network.
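In iptables mode, a Service with several endpoints gets one `KUBE-SEP-*` chain per Pod. The `KUBE-SVC-*` chain picks among them with the `statistic` match in random mode: the i-th rule (counting from 0) fires with probability 1/(n - i), and the last rule catches the rest. The net effect is an even split. The sketch below prints simplified rules in `iptables-save` style and checks the arithmetic. The chain names and the second endpoint are hypothetical, and real kube-proxy rules carry extra matches and comments.

```python
from fractions import Fraction


def svc_rules(svc_chain: str, endpoints: list[tuple[str, str]]) -> list[str]:
    """Build simplified iptables-save style rules for one Service.

    endpoints: (KUBE-SEP chain name, "podIP:port") pairs. Rule i (0-based)
    jumps with probability 1/(n - i); the last rule takes whatever is left.
    """
    n = len(endpoints)
    rules = []
    for i, (sep_chain, _) in enumerate(endpoints):
        if i < n - 1:
            p = 1 / (n - i)
            rules.append(
                f"-A {svc_chain} -m statistic --mode random "
                f"--probability {p:.10f} -j {sep_chain}"
            )
        else:
            rules.append(f"-A {svc_chain} -j {sep_chain}")
    for sep_chain, dest in endpoints:
        # Each KUBE-SEP chain rewrites the destination to one Pod (DNAT).
        rules.append(f"-A {sep_chain} -p tcp -m tcp -j DNAT --to-destination {dest}")
    return rules


def effective_share(n: int) -> list[Fraction]:
    """Overall probability that each of n endpoints is chosen by the rules above."""
    remaining = Fraction(1)
    shares = []
    for i in range(n):
        p = Fraction(1, n - i)
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares
```

For three endpoints, the first rule takes 1/3 of the traffic and the second takes 1/2 of the remaining 2/3. The last rule takes what is left, so each Pod ends up with 1/3.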

Ingress

An Ingress specifies how packets are forwarded, through a reverse proxy, to Services inside the cluster. The widely used NGINX Ingress Controller, for example, reads Ingress resources and configures a matching reverse proxy.

Ingress characteristics

- Provides a single externally accessible URL that you can configure to route to different Services based on the URL path, and it can implement SSL as well

- Acts as a Layer 7 load balancer

- Requires an Ingress controller (Deployment), which is NOT deployed by default

NGINX Ingress Controller (Deployment) example

```yaml
apiVersion: apps/v1  # extensions/v1beta1 Deployments were removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: nginx-ingress-controller
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx-ingress
  template:
    metadata:
      labels:
        name: nginx-ingress
    spec:
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.21.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
```

Ingress Resources (Configure)

The rule examples below use the legacy `extensions/v1beta1` Ingress API (`serviceName`/`servicePort`), which was removed in Kubernetes 1.22. On current clusters, write them with `networking.k8s.io/v1`, as in step 2-6 of Quiz 5.

Path types

Each path in an Ingress is required to have a corresponding path type. Paths that do not include an explicit pathType will fail validation. There are three supported path types:

- ImplementationSpecific: With this path type, matching is up to the IngressClass. Implementations can treat this as a separate pathType or treat it identically to Prefix or Exact path types.

- Exact: Matches the URL path exactly and with case sensitivity.

- Prefix: Matches based on a URL path prefix split by `/`. Matching is case-sensitive and done element by element. A path element is one of the labels produced by splitting the path on the `/` separator. A request matches path p if the elements of p are an element-wise prefix of the elements of the request path.

Note: If the last element of the path is only a substring of the last element in the request path, it is not a match. For example, `/foo/bar` matches `/foo/bar/baz` but does not match `/foo/barbaz`.
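The Exact and Prefix rules above can be expressed as a small matcher. It illustrates the documented semantics only, not ingress-nginx's implementation. `path_matches` is a hypothetical helper, and ImplementationSpecific is left to the IngressClass, as the text says.

```python
def _elements(path: str) -> list[str]:
    # Split on "/" and drop empty labels, so "/foo/bar/" -> ["foo", "bar"].
    return [label for label in path.split("/") if label]


def path_matches(path_type: str, rule_path: str, request_path: str) -> bool:
    """Match a request path against an Ingress path of type Exact or Prefix."""
    if path_type == "Exact":
        return rule_path == request_path  # whole string, case-sensitive
    if path_type == "Prefix":
        rule, req = _elements(rule_path), _elements(request_path)
        return req[: len(rule)] == rule  # element-wise, case-sensitive
    raise ValueError("ImplementationSpecific matching is up to the IngressClass")
```

Because the comparison works on whole elements, `/foo/bar` matches `/foo/bar/baz` but not `/foo/barbaz`, as in the note.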

Rule example 1.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-wear
spec:
  backend:
    serviceName: wear-service
    servicePort: 80
```

Rule example 2 (splitting traffic by URL).

- No host is specified, so the rule applies to all inbound HTTP traffic through the specified IP address.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-wear-watch
spec:
  rules:
  - http:
      paths:
      - path: /wear
        backend:
          serviceName: wear-service
          servicePort: 80
      - path: /watch
        backend:
          serviceName: watch-service
          servicePort: 80
```

Rule example 3 (splitting by hostname).

- If a host is provided (for example, foo.bar.com), the rules apply to that host.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-wear-watch
spec:
  rules:
  - host: wear.my-online-store.com
    http:
      paths:
      - backend:
          serviceName: wear-service
          servicePort: 80
  - host: watch.my-online-store.com
    http:
      paths:
      - backend:
          serviceName: watch-service
          servicePort: 80
```

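Note: rewrite-target

Without the rewrite-target option, this is what would happen:

```
http://<ingress-service>:<ingress-port>/watch --> http://<watch-service>:<port>/watch
http://<ingress-service>:<ingress-port>/wear --> http://<wear-service>:<port>/wear
```

With `nginx.ingress.kubernetes.io/rewrite-target: /`, a request for `/watch` is instead forwarded to the backend as `/`.

Note: with a regex path, the rewrite target can refer to capture groups. This is effectively `replace("/something(/|$)(.*)", "/$2")`: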

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
  name: rewrite
  namespace: default
spec:
  rules:
  - host: rewrite.bar.com
    http:
      paths:
      - backend:
          serviceName: http-svc
          servicePort: 80
        path: /something(/|$)(.*)
```
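In the example above, the regex path captures everything after `/something` in `$2`. The rewrite target then replaces the request path with `/` followed by that capture. The Python sketch below performs the same substitution, assuming `$N` refers to capture group N as in nginx. `rewrite` is a hypothetical helper, and ingress-nginx's own regex handling may differ in details.

```python
import re
from typing import Optional

# Regex path and rewrite target from the Ingress example above.
PATH_REGEX = re.compile(r"/something(/|$)(.*)")
REWRITE_TARGET = "/$2"


def rewrite(request_path: str) -> Optional[str]:
    """Return the path the backend would see, or None if the rule does not match."""
    m = PATH_REGEX.match(request_path)  # match() anchors at the start of the path
    if m is None:
        return None
    # Replace each $N in the target with capture group N.
    return re.sub(r"\$(\d+)", lambda ref: m.group(int(ref.group(1))), REWRITE_TARGET)
```

`/something/new` becomes `/new`, and both `/something` and `/something/` become `/`. A path like `/somethingelse` does not match, because `(/|$)` requires a slash or the end of the path right after `/something`.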

NGINX Ingress Service (NodePort) example

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
    name: http
  - port: 443
    targetPort: 443
    protocol: TCP
    name: https
  selector:
    name: nginx-ingress
```

CoreDNS

CoreDNS is a flexible, extensible DNS server that can act as the Kubernetes cluster DNS. It is used for service discovery.

FQDN (Fully Qualified Domain Name)

In Kubernetes, an FQDN lets you reach a Service or Pod in any namespace by domain name.

Format: {service or host name}.{namespace name}.{svc or pod}.cluster.local

| Hostname | Namespace | Type | Root | IP Address |
|---|---|---|---|---|
| web-service | apps | svc | cluster.local | 10.107.37.188 |
| 10-244-2-5 | default | pod | cluster.local | 10.244.2.5 |

```shell
# Service
curl http://web-service.apps.svc.cluster.local

# Pod
curl http://10-244-2-5.default.pod.cluster.local
```
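The naming scheme in the table fits into two small functions. This sketch assumes the default cluster domain `cluster.local`; `service_fqdn` and `pod_fqdn` are hypothetical names.

```python
def service_fqdn(service: str, namespace: str, domain: str = "cluster.local") -> str:
    """Service records: {service}.{namespace}.svc.{domain}"""
    return f"{service}.{namespace}.svc.{domain}"


def pod_fqdn(pod_ip: str, namespace: str, domain: str = "cluster.local") -> str:
    """Pod records: the IPv4 address with dots replaced by dashes."""
    return f"{pod_ip.replace('.', '-')}.{namespace}.pod.{domain}"
```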

CoreDNS configuration

- /etc/coredns/Corefile

```
$ cat /etc/coredns/Corefile
.:53 {
    errors
    health
    kubernetes cluster.local in-addr.arpa ip6.arpa {
        pods insecure
        upstream
        fallthrough in-addr.arpa ip6.arpa
    }
    prometheus :9153
    proxy . /etc/resolv.conf
    cache 30
    reload
}
```

CoreDNS Service

- kube-dns

```
pi@master:~ $ kubectl get service -n kube-system
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
kube-dns   ClusterIP   10.96.0.10   <none>        53/UDP,53/TCP,9153/TCP   21d
```

- kubelet config for CoreDNS

```
root@master:/var/lib/kubelet# cat config.yaml
```

```yaml
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: cgroupfs
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
```

- External DNS server

When the cluster DNS server cannot find a domain requested by a Pod, the query is forwarded to the nameserver specified in the CoreDNS Pods' /etc/resolv.conf file. That file is set to use the nameservers of the Kubernetes node.

```
pi@master:~ $ cat /etc/resolv.conf
# Generated by resolvconf
nameserver 192.168.123.254
nameserver 8.8.8.8
nameserver fd51:42f8:caae:d92e::1
nameserver 61.41.153.2
nameserver 1.214.68.2
```

Quiz 1.

1. Look up the network interface and MAC address

- ifconfig -a: list all network interfaces

- cat /etc/network/interfaces: the system's basic network configuration

- ifconfig eth0: show a network interface and its MAC address

- ip link show eth0: show a network interface and its MAC address

- ip a | grep -B2 10.3.116.12 (on controlplane)

Network interface: eth0, MAC address (ether): 02:42:0a:08:0b:06

```
root@controlplane:~# ip a | grep -B2 10.8.11.6
4890: eth0@if4891: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default
    link/ether 02:42:0a:08:0b:06 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.8.11.6/24 brd 10.8.11.255 scope global eth0
```

Worker node's network interface (checked from controlplane)

- You can also check it with ssh node01 ifconfig eth0

- arp node01

```
root@controlplane:~# arp node01
Address      HWtype  HWaddress           Flags Mask  Iface
10.8.11.8    ether   02:42:0a:08:0b:07   C           eth0
```

2. What is the interface/bridge created by Docker on this host?

- ifconfig -a: docker0

- ip addr

```
root@controlplane:~# ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default
    link/ether 02:42:8e:70:63:21 brd ff:ff:ff:ff:ff:ff
3: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc noqueue state UNKNOWN mode DEFAULT group default
    link/ether ae:8e:7a:c3:ed:cd brd ff:ff:ff:ff:ff:ff
4: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc noqueue state UP mode DEFAULT group default qlen 1000
    link/ether ee:04:24:06:10:7d brd ff:ff:ff:ff:ff:ff
5: veth0cbf7e57@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc noqueue master cni0 state UP mode DEFAULT group default
    link/ether 4e:9d:38:c6:36:3f brd ff:ff:ff:ff:ff:ff link-netnsid 2
6: vethe11f72d7@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc noqueue master cni0 state UP mode DEFAULT group default
    link/ether 26:8a:96:40:ad:fc brd ff:ff:ff:ff:ff:ff link-netnsid 3
4890: eth0@if4891: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:0a:08:0b:06 brd ff:ff:ff:ff:ff:ff link-netnsid 0
4892: eth1@if4893: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default
    link/ether 02:42:ac:11:00:39 brd ff:ff:ff:ff:ff:ff link-netnsid 1
```

What is the state of the interface docker0?

- state DOWN

```
root@controlplane:~# ip link show docker0
2: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default
    link/ether 02:42:8e:70:63:21 brd ff:ff:ff:ff:ff:ff
```

3. What is the default gateway?

- ip route show default

```
root@controlplane:~# ip route show default
default via 172.17.0.1 dev eth1
```

4. What is the port the kube-scheduler is listening on in the controlplane node?

- netstat -nplt | grep scheduler

- netstat -natulp | grep kube-scheduler

```
root@controlplane:~# netstat -nplt
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:8080 0.0.0.0:* LISTEN 735/ttyd
tcp 0 0 127.0.0.1:10257 0.0.0.0:* LISTEN 3716/kube-controlle
tcp 0 0 127.0.0.1:10259 0.0.0.0:* LISTEN 3776/kube-scheduler
tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN 636/systemd-resolve
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 758/sshd
tcp 0 0 127.0.0.1:36697 0.0.0.0:* LISTEN 4974/kubelet
tcp 0 0 127.0.0.11:45217 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 4974/kubelet
tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 6178/kube-proxy
tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 3893/etcd
tcp 0 0 10.8.11.6:2379 0.0.0.0:* LISTEN 3893/etcd
tcp 0 0 10.8.11.6:2380 0.0.0.0:* LISTEN 3893/etcd
tcp 0 0 127.0.0.1:2381 0.0.0.0:* LISTEN 3893/etcd
tcp6 0 0 :::10256 :::* LISTEN 6178/kube-proxy
tcp6 0 0 :::22 :::* LISTEN 758/sshd
tcp6 0 0 :::8888 :::* LISTEN 5342/kubectl
tcp6 0 0 :::10250 :::* LISTEN 4974/kubelet
tcp6 0 0 :::6443 :::* LISTEN 4003/kube-apiserver
```

5. Notice that ETCD is listening on two ports. Which of these has more client connections established?

- netstat -natulp | grep etcd | grep LISTEN

- netstat -anp | grep etcd | grep 2379 | wc -l

- Answer: 2379, the client port, has far more ESTABLISHED connections. 2380 is the peer (member-to-member) port.

```
root@controlplane:~# netstat -anp | grep etcd
tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 3893/etcd
tcp 0 0 10.8.11.6:2379 0.0.0.0:* LISTEN 3893/etcd
tcp 0 0 10.8.11.6:2380 0.0.0.0:* LISTEN 3893/etcd
tcp 0 0 127.0.0.1:2381 0.0.0.0:* LISTEN 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35234 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:33034 127.0.0.1:2379 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34830 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35498 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35394 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35458 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35546 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35378 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35418 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35450 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35130 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:33034 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35148 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35250 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34916 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34812 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34758 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35232 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34846 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35060 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35566 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34872 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35368 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35442 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35356 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34724 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34972 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34794 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34618 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35528 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35208 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34862 ESTABLISHED 3893/etcd
tcp 0 0 10.8.11.6:2379 10.8.11.6:37174 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35468 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35310 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35220 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35254 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35472 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35040 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34744 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35506 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35036 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34908 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35512 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34638 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35228 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35300 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35106 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35170 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35486 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35434 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35284 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34628 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35196 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35412 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35336 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34964 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35184 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35422 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34942 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34924 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35540 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35294 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35246 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35428 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34822 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35384 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35108 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34746 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35072 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34752 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35086 ESTABLISHED 3893/etcd
tcp 0 0 10.8.11.6:37174 10.8.11.6:2379 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35714 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35584 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:34730 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35324 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35268 ESTABLISHED 3893/etcd
tcp 0 0 127.0.0.1:2379 127.0.0.1:35706 ESTABLISHED 3893/etcd
```
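Counting with `grep 2379 | wc -l` also picks up lines where 2379 is the remote port, such as `127.0.0.1:33034 127.0.0.1:2379`. A stricter count groups ESTABLISHED sockets by their local port. The sketch below assumes the `netstat -anp` column order shown above; `established_by_local_port` is a hypothetical helper.

```python
from collections import Counter


def established_by_local_port(netstat_output: str, ports: set[str]) -> Counter:
    """Count ESTABLISHED connections whose *local* port is one of `ports`."""
    counts: Counter = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Columns: Proto Recv-Q Send-Q Local-Address Foreign-Address State PID/Program
        if len(fields) < 6 or fields[5] != "ESTABLISHED":
            continue
        local_port = fields[3].rsplit(":", 1)[1]
        if local_port in ports:
            counts[local_port] += 1
    return counts
```

Run on the full output above, port 2379 gets dozens of hits and port 2380 gets none.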

Quiz 2.

1. Inspect the kubelet service and identify the network plugin configured for Kubernetes.

- ps -aux | grep kubelet | grep --color network-plugin=

```
root@controlplane:~# ps -aux | grep kubelet | grep --color network-plugin=
root 4819 0.0 0.0 4003604 104604 ? Ssl 04:51 1:12 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --network-plugin=cni --pod-infra-container-image=k8s.gcr.io/pause:3.2
```

2. What is the path configured with all binaries of CNI supported plugins?

- The CNI binaries are located under /opt/cni/bin by default.

Identify which of the below plugins is not available in the list of available CNI plugins on this host?

- Run the command: ls /opt/cni/bin and identify the one not present at that directory.

```
$ ls /opt/cni/bin/
bandwidth bridge dhcp firewall flannel host-device host-local ipvlan loopback macvlan portmap ptp sbr static tuning vlan
```

3. What is the CNI plugin configured to be used on this Kubernetes cluster?

- ls /etc/cni/net.d/

- You can also tell by listing the Pods and looking at their names.

```
controlplane $ cat /etc/cni/net.d/10-weave.conflist
```

```json
{
  "cniVersion": "0.3.0",
  "name": "weave",
  "plugins": [
    {
      "name": "weave",
      "type": "weave-net",
      "hairpinMode": true
    },
    {
      "type": "portmap",
      "capabilities": {"portMappings": true},
      "snat": true
    }
  ]
}
```

4. What binary executable file will be run by kubelet after a container and its associated namespace are created?

- Look at the type field in file /etc/cni/net.d/10-flannel.conflist. (flannel)

```
root@controlplane:~# cat /etc/cni/net.d/10-flannel.conflist
```

```json
{
  "name": "cbr0",
  "cniVersion": "0.3.1",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}
```

Note: Pod error when the CNI plugin is not installed (No Network configured)

```
Events:
  Type     Reason                  Age  From               Message
  ----     ------                  ---- ----               -------
  Normal   Scheduled               46s  default-scheduler  Successfully assigned default/app to node01
  Warning  FailedCreatePodSandBox  44s  kubelet, node01    Failed to create pod sandbox: rpc error: code = Unknown desc = [failed to set up sandbox container "6b3b648ea4a547e46b96ca4d23841e9acc0edc0d43f0e879af8f45ebb498c74e" network for pod "app": networkPlugin cni failed to set up pod "app_default" network: unable to allocate IP address: Post "http://127.0.0.1:6784/ip/6b3b648ea4a547e46b96ca4d23841e9acc0edc0d43f0e879af8f45ebb498c74e": dial tcp 127.0.0.1:6784: connect: connection refused, failed to clean up sandbox container "6b3b648ea4a547e46b96ca4d23841e9acc0edc0d43f0e879af8f45ebb498c74e" network for pod "app": networkPlugin cni failed to teardown pod "app_default" network: Delete "http://127.0.0.1:6784/ip/6b3b648ea4a547e46b96ca4d23841e9acc0edc0d43f0e879af8f45ebb498c74e": dial tcp 127.0.0.1:6784: connect: connection refused]
```

5. Identify the name of the bridge network/interface created by weave on each node

- ifconfig : weave

- ip link : weave

```
controlplane $ ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000
    link/ether 02:42:ac:11:00:10 brd ff:ff:ff:ff:ff:ff
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default
    link/ether 02:42:da:b0:4f:0c brd ff:ff:ff:ff:ff:ff
6: datapath: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/ether fe:f9:14:7b:9e:e8 brd ff:ff:ff:ff:ff:ff
8: weave: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue state UP mode DEFAULT group default qlen 1000
    link/ether 06:a9:f6:0f:2d:d6 brd ff:ff:ff:ff:ff:ff
10: vethwe-datapath@vethwe-bridge: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master datapath state UP mode DEFAULT group default
    link/ether fa:69:cd:fe:bd:65 brd ff:ff:ff:ff:ff:ff
11: vethwe-bridge@vethwe-datapath: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue master weave state UP mode DEFAULT group default
    link/ether ea:09:2e:7d:f1:74 brd ff:ff:ff:ff:ff:ff
12: vxlan-6784: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue master datapath state UNKNOWN mode DEFAULT group default qlen 1000
    link/ether 0a:8f:c0:a8:02:9f brd ff:ff:ff:ff:ff:ff
```

6. What is the POD IP address range configured by weave?

- ip addr show weave

```
controlplane $ ip addr show weave
8: weave: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1376 qdisc noqueue state UP group default qlen 1000
    link/ether 06:a9:f6:0f:2d:d6 brd ff:ff:ff:ff:ff:ff
    inet 10.32.0.1/12 brd 10.47.255.255 scope global weave
       valid_lft forever preferred_lft forever
    inet6 fe80::4a9:f6ff:fe0f:2dd6/64 scope link
       valid_lft forever preferred_lft forever
```
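The `/12` on the weave bridge address defines the Pod range. The network part of `10.32.0.1/12` is `10.32.0.0/12`, and its broadcast address is the `10.47.255.255` shown above. A quick check with Python's `ipaddress` module (`pod_range` is a hypothetical helper):

```python
import ipaddress


def pod_range(interface_cidr: str) -> tuple[str, str, str, int]:
    """Network, first address, broadcast address and size for an interface CIDR."""
    net = ipaddress.ip_interface(interface_cidr).network
    return (str(net), str(net.network_address), str(net.broadcast_address),
            net.num_addresses)
```

For `10.32.0.1/12` this yields the network `10.32.0.0/12`, which spans `10.32.0.0` to `10.47.255.255` (2^20 addresses).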

7. What is the default gateway configured on the PODs scheduled on node03?

- kubectl run busybox --image=busybox --dry-run=client -o yaml --command -- sleep 1000 > pod.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    run: busybox
  name: busybox
spec:
  nodeName: node03 # pin the Pod to node03
  containers:
  - command:
    - sleep
    - "1000"
    image: busybox
    name: busybox
```

- kubectl exec -it busybox -- sh

- ip route: 10.38.0.0

```
# ip r
default via 10.38.0.0 dev eth0
10.32.0.0/12 dev eth0 scope link src 10.38.0.1
```

- ssh node03 ip route: 10.46.0.0

```
controlplane $ ssh node03 ip route
default via 172.17.0.1 dev ens3
10.32.0.0/12 dev weave proto kernel scope link src 10.46.0.0
172.17.0.0/16 dev ens3 proto kernel scope link src 172.17.0.22
172.18.0.0/24 dev docker0 proto kernel scope link src 172.18.0.1 linkdown
```

Quiz 3.

1. What network range are the nodes in the cluster part of?

- ip addr

```
controlplane $ ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 02:42:ac:11:00:0f brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.15/16 brd 172.17.255.255 scope global ens3
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe11:f/64 scope link
       valid_lft forever preferred_lft forever
```

2. What is the range of IP addresses configured for Pods on this cluster?

- kubectl logs weave-net-xxx -c weave -n kube-system | grep ipalloc-range

- You can also check it in the Weave configuration.

```
controlplane $ kubectl logs weave-net-4p55r -c weave -n kube-system | grep ipalloc-range
INFO: 2021/07/17 06:50:50.095797 Command line options: map[conn-limit:200 datapath:datapath db-prefix:/weavedb/weave-net docker-api: expect-npc:true http-addr:127.0.0.1:6784 ipalloc-init:consensus=1 ipalloc-range:10.32.0.0/12 metrics-addr:0.0.0.0:6782 name:06:a9:f6:0f:2d:d6 nickname:controlplane no-dns:true no-masq-local:true port:6783]
```

3. What is the IP Range configured for the services within the cluster?

- cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep cluster-ip-range

```
controlplane $ cat /etc/kubernetes/manifests/kube-apiserver.yaml | grep cluster-ip-range
    - --service-cluster-ip-range=10.96.0.0/12
```
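Three ranges have now appeared: the node network (172.17.0.0/16 from question 1), the Pod range (10.32.0.0/12), and the Service range (10.96.0.0/12). They must not overlap. Service IPs seen elsewhere, such as kube-dns's 10.96.0.10, should also fall inside the Service range. A small sketch of that check with `ipaddress`; `overlapping_pairs` is a hypothetical helper.

```python
import ipaddress
from itertools import combinations


def overlapping_pairs(ranges: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named CIDR ranges that overlap (ideally none)."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]
```

10.96.0.0/12 covers 10.96.0.0 to 10.111.255.255. That range includes both kube-dns (10.96.0.10) and the mysql Service address from Quiz 4 (10.111.253.233).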

4. What type of proxy is the kube-proxy configured to use?

- kubectl logs kube-proxy-xxx -n kube-system

- assuming iptables proxy

```
controlplane $ k logs kube-proxy-fsn4s -n kube-system
I0717 06:51:30.922706 1 node.go:136] Successfully retrieved node IP: 172.17.0.17
I0717 06:51:30.922791 1 server_others.go:111] kube-proxy node IP is an IPv4 address (172.17.0.17), assume IPv4 operation
W0717 06:51:30.977974 1 server_others.go:579] Unknown proxy mode "", assuming iptables proxy
I0717 06:51:30.978240 1 server_others.go:186] Using iptables Proxier.
I0717 06:51:30.978587 1 server.go:650] Version: v1.19.0
I0717 06:51:30.979181 1 conntrack.go:52] Setting nf_conntrack_max to 131072
I0717 06:51:30.979710 1 config.go:315] Starting service config controller
I0717 06:51:30.979723 1 shared_informer.go:240] Waiting for caches to sync for service config
I0717 06:51:30.979739 1 config.go:224] Starting endpoint slice config controller
I0717 06:51:30.979742 1 shared_informer.go:240] Waiting for caches to sync for endpoint slice config
I0717 06:51:31.079918 1 shared_informer.go:247] Caches are synced for service config
I0717 06:51:31.079925 1 shared_informer.go:247] Caches are synced for endpoint slice config
```

Note: kube-proxy as a DaemonSet

Running `kubectl -n kube-system get ds` shows that kube-proxy is a DaemonSet, so it runs on every node.

```
pi@master:~ $ kubectl -n kube-system get ds
NAME         DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
kube-proxy   4         4         4       4            4           kubernetes.io/os=linux   21d
weave-net    4         4         4       4            4           <none>                   21d
```

Quiz 4.

1. What is the IP of the CoreDNS server that should be configured on PODs to resolve services?

- kube-dns

```
controlplane $ k get svc -n kube-system
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
kube-dns   ClusterIP   10.96.0.10   <none>        53/UDP,53/TCP,9153/TCP   60m
```

2. Where is the configuration file located for configuring the CoreDNS service?

- Deployment configuration : /etc/coredns/Corefile

```
controlplane $ kubectl -n kube-system describe deployments.apps coredns | grep -A2 Args | grep Corefile
      /etc/coredns/Corefile
```

The Corefile is passed into the CoreDNS Pod by a ConfigMap object; check the mount information:

```
pi@master:~ $ kubectl describe deploy coredns -n kube-system
Name:                   coredns
Namespace:              kube-system
CreationTimestamp:      Sat, 26 Jun 2021 18:21:35 +0900
Labels:                 k8s-app=kube-dns
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               k8s-app=kube-dns
Replicas:               2 desired | 2 updated | 2 total | 0 available | 2 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  1 max unavailable, 25% max surge
Pod Template:
  Labels:           k8s-app=kube-dns
  Service Account:  coredns
  Containers:
   coredns:
    Image:       k8s.gcr.io/coredns/coredns:v1.8.0
    Ports:       53/UDP, 53/TCP, 9153/TCP
    Host Ports:  0/UDP, 0/TCP, 0/TCP
    Args:
      -conf
      /etc/coredns/Corefile
    Limits:
      memory:  170Mi
    Requests:
      cpu:        100m
      memory:     70Mi
    Liveness:     http-get http://:8080/health delay=60s timeout=5s period=10s #success=1 #failure=5
    Readiness:    http-get http://:8181/ready delay=0s timeout=1s period=10s #success=1 #failure=3
    Environment:  <none>
    Mounts:
      /etc/coredns from config-volume (ro)
  Volumes:
   config-volume:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      coredns
    Optional:  false
  Priority Class Name:  system-cluster-critical
```

3. From the hr pod nslookup the mysql service and redirect the output to a file /root/CKA/nslookup.out

- nslookup mysql.payroll

```
kubectl exec hr -- nslookup mysql.payroll > /root/CKA/nslookup.out
```

```
Server: 10.96.0.10
Address: 10.96.0.10

Name: mysql.payroll.svc.cluster.local
Address: 10.111.253.233
```

nslookup shows the Service's FQDN and its address.
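The short name `mysql.payroll` resolves because of the search list in the Pod's /etc/resolv.conf. Assume the usual ClusterFirst settings the kubelet writes: search `<namespace>.svc.cluster.local svc.cluster.local cluster.local` and `options ndots:5`. A resolver then tries candidates in the order sketched below. `search_candidates` is a hypothetical helper, and `default` as the hr Pod's namespace is an assumption.

```python
def search_candidates(name: str, namespace: str, ndots: int = 5,
                      domain: str = "cluster.local") -> list[str]:
    """Order in which a resolver tries `name` under the assumed Pod resolv.conf."""
    if name.endswith("."):
        return [name]  # already fully qualified: no search-list expansion
    search = [f"{namespace}.svc.{domain}", f"svc.{domain}", domain]
    expanded = [f"{name}.{suffix}" for suffix in search]
    if name.count(".") >= ndots:
        return [name] + expanded  # enough dots: try the name as-is first
    return expanded + [name]
```

`mysql.payroll` has only one dot, so the search suffixes come first. `mysql.payroll.default.svc.cluster.local` fails, and the second candidate, `mysql.payroll.svc.cluster.local`, is the name nslookup reports.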

Note: a Service's selector matches Pod labels. You can check a Pod's labels with kubectl get po xx --show-labels.

Quiz 5.

1. You can add an Ingress resource to a specific namespace.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test-ingress
  namespace: critical-space
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /pay
        backend:
          serviceName: pay-service
          servicePort: 8282
```

2. Deploy the ingress controller

2-0. Namespace

- kubectl create namespace ingress-space (short form: kubectl create ns ingress-space)

2-1. ConfigMap

- kubectl create configmap nginx-configuration --namespace ingress-space (short form: cm)

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configuration
  namespace: ingress-space
```

2-2. Service account

- kubectl create serviceaccount ingress-serviceaccount --namespace ingress-space (short form: sa)

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-serviceaccount
  namespace: ingress-space
```

2-3. Role and RoleBinding (created automatically)

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  creationTimestamp: "2021-07-25T07:23:17Z"
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  name: ingress-role-binding
  namespace: ingress-space
  resourceVersion: "1409"
  uid: abd25bb3-4ae5-45c0-b1aa-c5c98b49c351
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-role
subjects:
- kind: ServiceAccount
  name: ingress-serviceaccount
```

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: "2021-07-25T07:23:17Z"
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  name: ingress-role
  namespace: ingress-space
  resourceVersion: "1408"
  uid: 535a7ee6-aabd-4a9f-9144-2799ab1327c6
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resourceNames:
  - ingress-controller-leader-nginx
  resources:
  - configmaps
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - endpoints
  verbs:
  - get
```

2-4. Ingress controller (created automatically)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ingress-controller
  namespace: ingress-space
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx-ingress
  template:
    metadata:
      labels:
        name: nginx-ingress
    spec:
      serviceAccountName: ingress-serviceaccount
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.21.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --default-backend-service=app-space/default-http-backend
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
```

2-5. Create the Service as a NodePort

- kubectl -n ingress-space expose deployment ingress-controller --name ingress --port 80 --target-port 80 --type NodePort --dry-run=client -o yaml > ingress-svc.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress
  namespace: ingress-space
spec:
  type: NodePort
  selector:
    name: nginx-ingress
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30080
```

2-6. Create the Ingress resource in the app-space namespace

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tt
  namespace: app-space
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /wear
        pathType: Prefix
        backend:
          service:
            name: wear-service
            port:
              number: 8080
      - path: /watch
        pathType: Prefix
        backend:
          service:
            name: video-service
            port:
              number: 8080
```

Sources

- https://kubernetes.github.io/ingress-nginx/examples/

- https://kubernetes.github.io/ingress-nginx/examples/rewrite/

- https://kubernetes.io/docs/concepts/cluster-administration/addons/

- https://kubernetes.io/docs/setup/independent/install-kubeadm/#check-required-ports

- https://github.com/kubernetes/dns/blob/master/docs/specification.md
